Spam on the Web: how to protect your site and how Google keeps Search clean

Spam remains an open problem, both in the daily experience of Web users and for Google, which over the years has stepped up its work against this annoying (and dangerous) phenomenon. One of the latest examples is a new section of the Quality Guidelines, “Preventing Abuse on Your Site and Platform,” which offers specific guidance on protecting a site from user-generated spam, one of the most common plagues in the hosting business. For some time now, Google has been cracking down on spam, including warnings to publishers and site owners who do not protect their pages from UGC spam. As the Webspam Report 2021 also reiterated, the numbers are still high and affect the Search system, so it has become necessary to provide guidance that protects sites and, in turn, the search engine from misleading, useless and often dangerous results.

Google’s guidance for avoiding spam on your site

In Google’s experience, the main entry points for spammers are “open comment forms and other user-generated content inputs,” which allow malicious actors to generate spam content on a site without its owner’s knowledge. Hosting platforms can also be susceptible to abuse: spammers may create large numbers of sites that violate quality standards and add little or no value to the Web.

Fortunately, Google’s new guide says, “avoiding abuse on your platform or site generally isn’t difficult,” and “even simple deterrents, such as an unusual challenge that users must complete before interacting with your property” can be enough to discourage spammers.

Specifically, the guide suggests at least six interventions that can prevent or reduce spam on a site:

- Inform users that you do not allow spam on your service

- Identify spam accounts

- Use manual approval for suspicious user interactions

- Use a block list to prevent repetitive spamming attempts

- Block automatic account creation

- Monitor your service for abuse

Interventions to reduce the activity of spammers

Google’s first piece of advice is to tell users explicitly that spam is not allowed on the service, by publishing clear rules on prohibited behavior, for example during registration. The guide adds that it can also be useful to let trusted users report content on the property that they consider spam.

The second suggestion is a practical one: find a way to identify spam accounts on the site by keeping records of registrations and other user interactions with the platform, and look for typical spam patterns, such as:

- Date and time of form completion

- Number of requests sent from the same IP address range

- User agents used during registration.

- User names or other form values chosen during registration.
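As an illustration, the first two patterns (timing and IP ranges) can be checked with a short script. This is a minimal sketch, assuming registration records are already available as simple objects; the Registration type, the /24 grouping, the ten-minute window and the threshold of five are hypothetical choices, not values from Google's guide.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta
import ipaddress


@dataclass
class Registration:
    # Hypothetical record format; adapt it to what your platform actually logs.
    timestamp: datetime
    ip: str
    user_agent: str
    username: str


def suspicious_ranges(regs, window=timedelta(minutes=10), threshold=5):
    """Return the IPv4 /24 ranges that produced at least `threshold`
    registrations within any `window` of time."""
    by_range = defaultdict(list)
    for reg in regs:
        # Collapse each address to its /24 network, e.g. 203.0.113.0/24.
        net = ipaddress.ip_network(f"{reg.ip}/24", strict=False)
        by_range[net].append(reg.timestamp)

    flagged = set()
    for net, times in by_range.items():
        times.sort()
        start = 0
        # Sliding window over the sorted timestamps of this range.
        for end in range(len(times)):
            while times[end] - times[start] > window:
                start += 1
            if end - start + 1 >= threshold:
                flagged.add(str(net))
                break
    return flagged
```

The user agent and username fields are kept in the record so the other two patterns can be checked in the same pass; a flagged range is a signal for review, not proof of abuse.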

These indicators can help us build a user reputation system, useful both for engaging users and for identifying spammers. Since many comment spammers want their content to appear in search engines, the guide says, a good practice is to “add a robots noindex meta tag to posts by new users who are not known within the platform”; after a certain period, once the user has built up a good reputation, we can allow their content to be indexed. This method is a strong deterrent for spammers. Also, since spammers often want to leave a link to their own site, it can be useful to add a rel="nofollow" or rel="ugc" attribute to all links in untrusted content.
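A minimal sketch of how a platform might apply both ideas when rendering a post. The 30-day account age and reputation score of 10 are hypothetical thresholds (the guide gives no numbers), and the function names are illustrative; the noindex meta tag and the combined rel="ugc nofollow" value are standard markup.

```python
from datetime import datetime, timedelta
from html import escape

# Hypothetical policy values; Google's guide does not prescribe numbers.
MIN_ACCOUNT_AGE = timedelta(days=30)
MIN_REPUTATION = 10


def is_trusted(created_at, reputation, now):
    """Trusted once the account is old enough AND has enough reputation."""
    return now - created_at >= MIN_ACCOUNT_AGE and reputation >= MIN_REPUTATION


def robots_meta(trusted):
    """Keep posts by unknown users out of the index until they earn trust."""
    return "" if trusted else '<meta name="robots" content="noindex">'


def render_link(url, text, trusted):
    """Render a user-supplied link, qualified with rel="ugc nofollow"
    when its author is not yet trusted. Both parts are HTML-escaped."""
    rel = "" if trusted else ' rel="ugc nofollow"'
    return f'<a href="{escape(url, quote=True)}"{rel}>{escape(text)}</a>'
```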

An effective way to fight spam, although it takes extra work and adds a “load to your daily activities,” is a manual approval (or moderation) system for some user interactions. This prevents spammers from publishing potential spam immediately, and its effectiveness is the reason comment moderation is a built-in feature of most CMSs.
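The moderation flow can be reduced to a small queue: trusted authors publish directly, everyone else waits for a moderator. This is a sketch only; the ModerationQueue and Post classes are hypothetical, and in a real CMS this logic is provided by the built-in comment moderation feature.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    PUBLISHED = "published"
    REJECTED = "rejected"


@dataclass
class Post:
    author: str
    body: str
    status: Status = Status.PENDING


@dataclass
class ModerationQueue:
    # Hypothetical: authors whose posts skip the queue (e.g. approved regulars).
    trusted_authors: set = field(default_factory=set)
    pending: list = field(default_factory=list)

    def submit(self, post):
        """Publish posts from trusted authors immediately; hold the rest."""
        if post.author in self.trusted_authors:
            post.status = Status.PUBLISHED
        else:
            self.pending.append(post)
        return post.status

    def review(self, post, approve):
        """A moderator approves or rejects a held post."""
        self.pending.remove(post)
        post.status = Status.PUBLISHED if approve else Status.REJECTED
        return post.status
```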

Google also suggests making it easy to remove profiles similar to those already identified as spam: for example, if several spam profiles come from the same IP address, we can add that address to a permanent block list, using plugins such as Akismet on various CMSs (e.g., WordPress) or adding the IP address to the firewall’s deny list.
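Where no plugin or firewall is available, the block list itself is simple to model with Python's standard ipaddress module. A minimal sketch, assuming entries are single addresses or CIDR ranges; the IPBlockList class is hypothetical and persistence is left out.

```python
import ipaddress


class IPBlockList:
    """Permanent block list of single addresses or whole CIDR ranges."""

    def __init__(self, entries=()):
        self._nets = [ipaddress.ip_network(e, strict=False) for e in entries]

    def add(self, entry):
        # A bare address such as "203.0.113.7" becomes a /32 (or /128) network.
        self._nets.append(ipaddress.ip_network(entry, strict=False))

    def is_blocked(self, ip):
        addr = ipaddress.ip_address(ip)
        # An IPv4 address is never "in" an IPv6 network (and vice versa):
        # the membership test simply returns False.
        return any(addr in net for net in self._nets)
```

Blocking whole ranges catches spammers who rotate addresses within one provider, at the cost of occasionally blocking legitimate neighbors.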

To discourage the rapid publication of spam, a basic measure such as adding reCAPTCHA or a similar verification tool to the registration form can also be effective: it lets through only requests from people and prevents automated scripts from generating numerous sites on the hosting service.
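With reCAPTCHA, the form sends a token that the server must confirm with Google's siteverify endpoint before accepting the registration. A minimal sketch using only the standard library; the secret comes from the reCAPTCHA admin console, and the injectable urlopen parameter is an assumption added here so the function can be tested offline.

```python
import json
import urllib.parse
import urllib.request

VERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def verify_recaptcha(token, secret, remote_ip=None, urlopen=urllib.request.urlopen):
    """Ask Google whether the reCAPTCHA token sent with a form is valid.

    `urlopen` is injectable so the function can be exercised without network.
    """
    params = {"secret": secret, "response": token}
    if remote_ip:
        params["remoteip"] = remote_ip
    data = urllib.parse.urlencode(params).encode()  # passing data makes it a POST
    with urlopen(VERIFY_URL, data=data, timeout=5) as resp:
        result = json.load(resp)
    # Reject the registration unless Google confirms the token.
    return bool(result.get("success"))
```

If the call fails (network error, timeout), the exception propagates; a cautious platform would treat that as "not verified" rather than letting the request through.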

Finally, the last point in Google’s guide stresses the importance of monitoring the service for abuse, with particular attention to three aspects:

- Monitor the property for spam indicators such as redirects, large numbers of ad blocks, specific spammy keywords, and large sections of hard-coded JavaScript code. The site: search operator or Google Alerts can help detect problems, Google explains.

- Keep an eye on web server log files to detect sudden spikes in traffic.

- Monitor the property for phishing and malware-infected pages. In practice, we can “use Google’s Safe Browsing API to regularly test URLs on your service,” and the guide also recommends other ways to check the state of the service: for example, “if you choose to target mainly users in Japan, what are the odds of thousands of interactions from an Italian IP occurring overnight on your property?” Several tools can detect the language of new sites, such as language detection libraries or the Google Translate v2 API.
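The Safe Browsing check can be scripted against the Safe Browsing Lookup API (v4), whose threatMatches:find method takes a JSON body listing the URLs to test and returns an empty object when nothing matches. A minimal sketch: the client ID and version are placeholders, the chosen threat types are an assumption, and check_urls needs a real API key, so only the request-building and response-parsing parts run offline.

```python
import json
import urllib.request

API_URL = "https://safebrowsing.googleapis.com/v4/threatMatches:find?key={key}"


def build_lookup_body(urls, client_id="example-site", client_version="1.0"):
    """Request body for threatMatches:find; client_id/version are placeholders."""
    return {
        "client": {"clientId": client_id, "clientVersion": client_version},
        "threatInfo": {
            "threatTypes": ["MALWARE", "SOCIAL_ENGINEERING", "UNWANTED_SOFTWARE"],
            "platformTypes": ["ANY_PLATFORM"],
            "threatEntryTypes": ["URL"],
            "threatEntries": [{"url": u} for u in urls],
        },
    }


def flagged_urls(response):
    """The API returns {} when nothing matches, else a "matches" list."""
    return sorted({m["threat"]["url"] for m in response.get("matches", [])})


def check_urls(urls, api_key):
    # Network call: requires an API key enabled for the Safe Browsing API.
    req = urllib.request.Request(
        API_URL.format(key=api_key),
        data=json.dumps(build_lookup_body(urls)).encode(),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return flagged_urls(json.load(resp))
```

Running check_urls on a schedule over newly created pages gives the "regularly test URLs" routine the guide describes.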

The commitment to a safe and spam-free search experience

Google’s commitment to making the Search experience “safe and spam-free” is reiterated in an article by Cherry Prommawin (Search Relations) and Duy Nguyen (Search Quality Analyst), which summarizes the work done on the search engine in 2020 to “keep your results clean on Google and offer results from high-quality websites created by you instead of those containing spam”.

But this goal cannot be achieved without everyone’s collaboration, including site owners, because “a safer and spam-free Web and Google Search ecosystem starts with well-built websites”. That is why Google keeps working to provide better information, tools and resources that make it easier to build websites with the best possible user experience.

Spam prevention: numbers, tools and Google resources

The numbers show how much work this task requires: in 2020 alone, Google sent “over 140 million messages to site owners in Search Console, an increase compared to the previous year”.

Although the increase was largely driven “by new sites that use Search Console, by announcements of new features to help site owners during the COVID-19 emergency, as well as by useful information and notifications on potential problems that might have affected the appearance of their site in Search”, a substantial share concerned notifications of manual actions for spam.

As many as 2.9 million messages concerned manual actions, although today most cases are handled algorithmically rather than through manual action, and the number is falling compared to past years: in 2018 there were 4 million manual action notifications, and in 2017 as many as 6 million.

These communications contain “useful information for site owners who have suffered a breach, with indications to promptly address security issues and minimize interruptions and damage to users”.

The Search Console team worked “tirelessly throughout the year” on features that help detect spam or unsafe behavior on websites, such as the Disavow links tool, a new version of the Crawl Stats report and the SafeSearch filter report. The team also worked on features that can “help create websites with high quality content, which is vital to improve the ecosystem and reduce spam: for example, we moved the Rich Results Test out of beta to better support developers working with interesting types of Google Search results such as Events or Jobs”.

Google has also expanded the resources it makes available to everyone. On the educational side, for example, the article cites the Search Console training series, which helps you “get the most from the tools we provide”, and recalls the consolidation of all the company’s blogs and resources into the new Google Search Central. Finally, to provide better support, Google launched a new channel for reporting persistent security issues caused by malware, hacks or malicious downloads, and says it has received a wide range of reports that were then resolved positively for site owners.

The community’s involvement

In 2020, site owners also submitted nearly 40,000 posts to the Search Central support community, which continues to answer questions and solve problems, proving able to “help companies of all sizes to improve their online presence or appear for the first time”.

In 2020, Google also expanded the global community to Arabic, Simplified Chinese, Polish and Turkish, and it now supports 17 languages.

Besides the five in-person events it managed to hold in early 2020, in the following months Google found new ways of meeting: it hosted over 50 online events and more than 80 online office-hours sessions, launched the Search Central Lightning Talks and Product Summits, which could be followed from home, and started the Search Off the Record podcast and the first Virtual Webmaster Unconference.

How to protect the site from spam

In addition to Google’s efforts, site owners also need to do their part to help create a safer, spam-free Web, and a second article by the Search Quality team focuses on practical steps to prevent violations before they happen.

One element not to overlook is keeping software up to date, especially with important security updates, because “spammers can take advantage of security problems in previous versions of blogs, message boards and other content management systems”.

Google’s direct advice is to use comprehensive anti-spam systems such as Akismet, which “have plugins for many blogs and forum systems that are easy to install and carry out most of the work of fighting spam” automatically; in addition, for some platforms “reliable and well-known security plugins are available, which help secure the website and may be able to detect abuse in advance”.

The problem with UGC spam

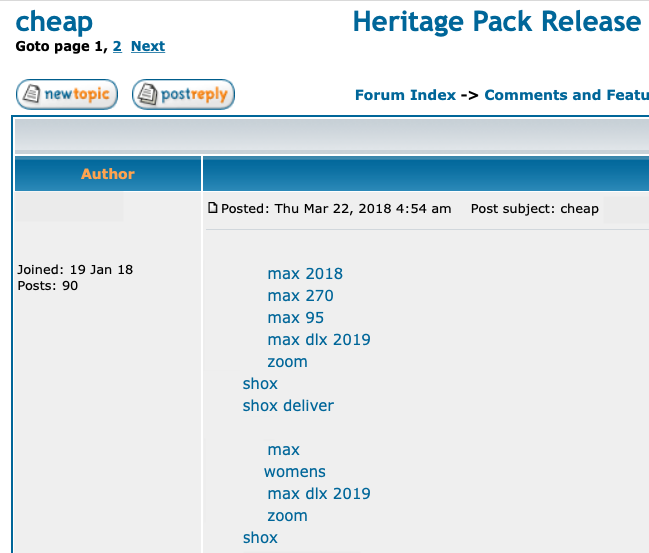

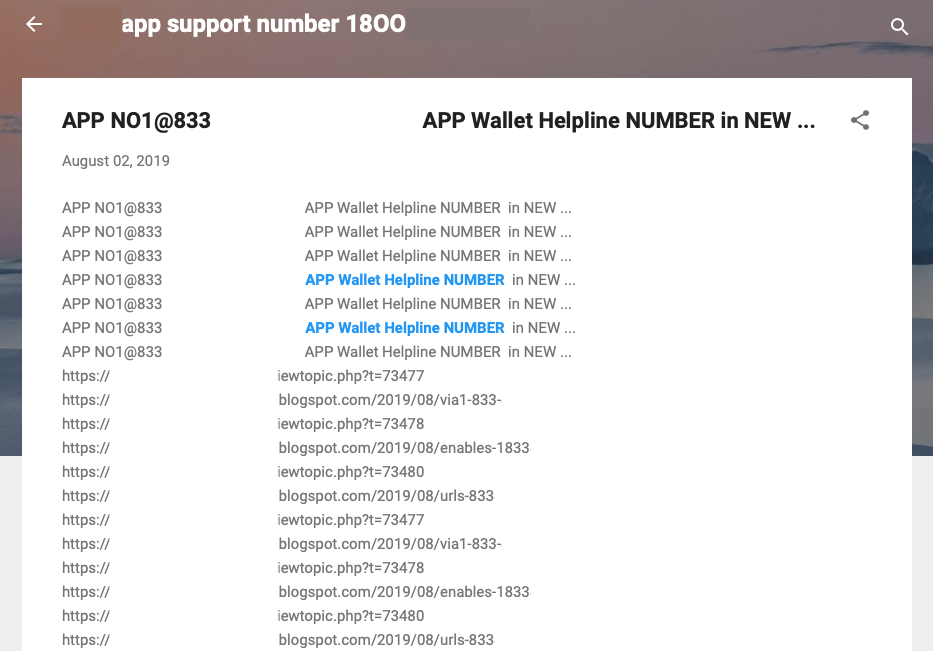

One of the most urgent problems is UGC spam, which users generate through a site’s interaction channels: forums, guestbooks, social media platforms, file uploaders, free hosting services or internal search services.

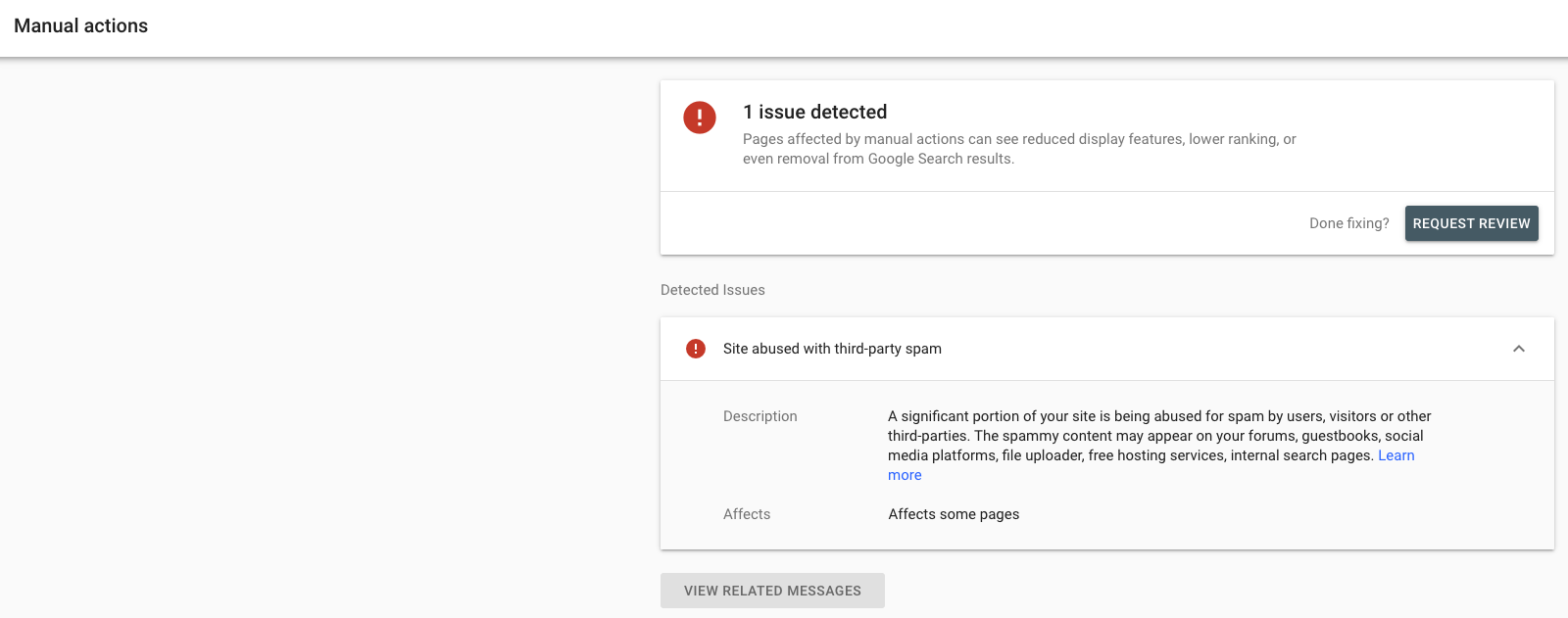

Spammers “often take advantage of these types of services to generate hundreds of spam pages that add little or no value to the web,” which exposes the site itself to risk: under Google’s Webmaster Guidelines, these situations could lead Google to take manual action on the pages involved.

Spam content of this kind can be harmful to the site and users in several ways:

- Low-quality content in some parts of a website may affect the ranking of the entire website.

- Spam content can lead users to unwanted or even harmful content, such as malware or phishing sites, which can damage the site’s reputation.

- Unintended traffic to unrelated content on the site can slow the site down and increase hosting costs.

- To protect the quality of its overall search results, Google could remove or demote pages overrun by third-party spam.

Suggestions to block spammers on the site

The article then offers a number of practical tips to prevent spammers from accessing and abusing a site.

- Prevent automatic account creation

When users create an account on the site, it is advisable to use a free CAPTCHA service such as Google reCAPTCHA, or similar verification tools (e.g., Securimage or Jcaptcha), to accept requests only from real people and prevent automated scripts from generating accounts and content on the site’s public platforms.

Another solution is to require new users to confirm their registration through a real email address, which can stop many spam bots from creating accounts automatically. We can also set filters to block suspicious email addresses or email services we do not trust.
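Both measures can be sketched with the standard library: a domain filter applied at sign-up, and an HMAC token embedded in the confirmation link so only someone who received the email can activate the account. The blocked domains and the in-memory SECRET_KEY are hypothetical placeholders; a production version would also load the key from configuration and give tokens an expiry.

```python
import hashlib
import hmac
import secrets

# Hypothetical block list of untrusted or disposable email domains.
BLOCKED_DOMAINS = {"spam-mail.example", "throwaway.example"}
SECRET_KEY = secrets.token_bytes(32)  # placeholder: load from configuration


def email_allowed(email):
    """Reject malformed addresses and domains on the block list."""
    local, sep, domain = email.strip().lower().rpartition("@")
    return bool(local and sep and domain) and domain not in BLOCKED_DOMAINS


def make_token(email):
    """Token embedded in the confirmation link sent to the address."""
    return hmac.new(SECRET_KEY, email.lower().encode(), hashlib.sha256).hexdigest()


def confirm(email, token):
    """Activate the account only if the link's token matches (constant-time)."""
    return hmac.compare_digest(make_token(email), token)
```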

- Activate moderation functions

It is useful to enable moderation features for comment and profile creation, requiring users to reach a certain reputation before they can publish links. If possible, change your settings to disallow anonymous posting and to hold posts by new users for approval before they become publicly visible.
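These settings boil down to a small decision rule applied to every submission. A minimal sketch, assuming each author has a numeric reputation score; the threshold of 20, the URL pattern and the decide function are hypothetical, since the guide only speaks of "a certain reputation".

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: the guide does not give a number.
LINK_REPUTATION = 20
URL_PATTERN = re.compile(r"https?://|www\.", re.IGNORECASE)


@dataclass
class Author:
    username: Optional[str]  # None means an anonymous visitor
    reputation: int = 0


def decide(author, body):
    """Return 'reject', 'hold' (needs approval) or 'publish'."""
    if author.username is None:
        return "reject"  # anonymous posting disabled
    if URL_PATTERN.search(body) and author.reputation < LINK_REPUTATION:
        return "hold"  # links require a minimum reputation
    if author.reputation == 0:
        return "hold"  # first posts by new users wait for approval
    return "publish"
```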

- Monitor the site to find spam content and clean up any issues

Google suggests using Search Console to monitor the site, in particular the Security Issues report and the Manual Actions report, to check whether any problems have been detected, and the Messages panel for further information.

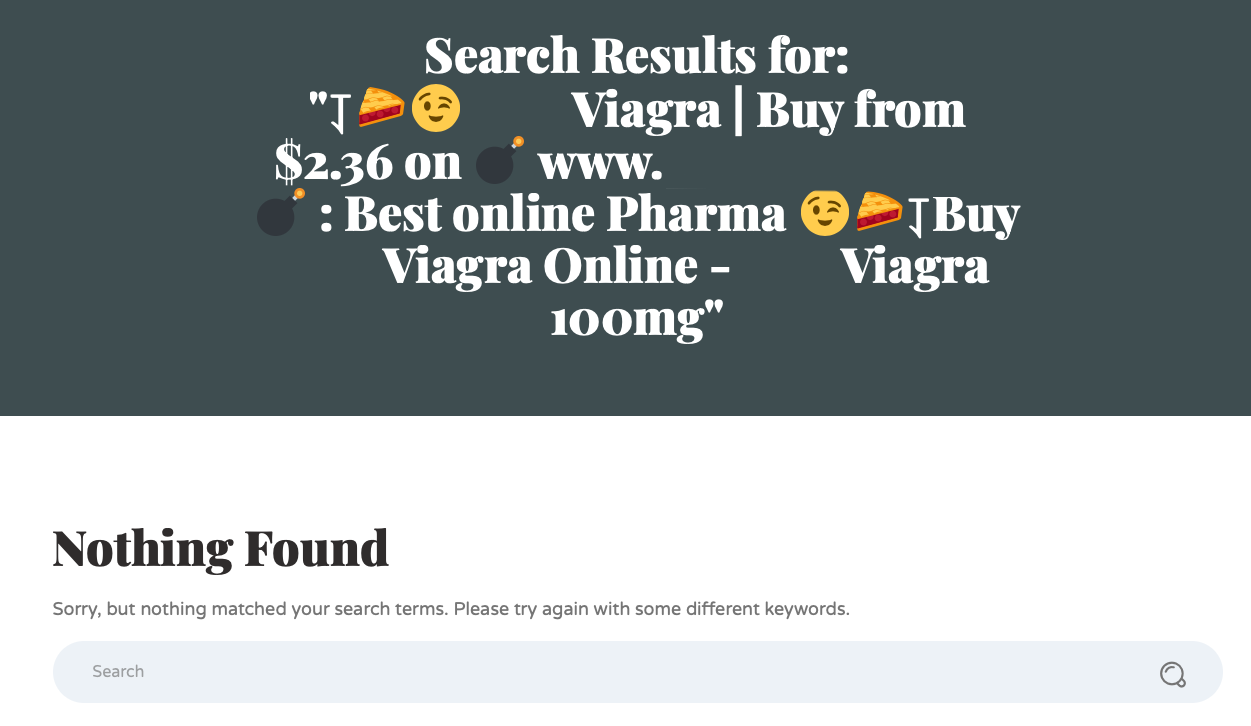

In addition, it is good practice to periodically check the site for unexpected content or spam by using the site: operator in Google Search together with commercial or adult keywords unrelated to the site’s topic. For example, the article says, “searching for [site:your-domain-name viagra] or [site:your-domain-name watch online] allows you to detect content that is not relevant on the site”, in particular:

- Out-of-context text or out-of-topic links with the sole purpose of promoting a third-party website or services (e.g., “download/watch free movies online”).

- Meaningless or automatically generated text (not written by a real user).

- Internal search results pages where the user query is off-topic and intended to promote a third-party website or service.

Another tip is to monitor the web server log files for sudden traffic spikes, especially on newly created pages, looking for example at URLs containing keywords that are completely irrelevant to the site. To identify high-traffic URLs that may be problematic, we can use the Pages report in Google Analytics.
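A rough version of this log check: count requests per path per day from an access log in Common or Combined Log Format, then flag paths whose traffic jumped. The tenfold factor and the 50-hit floor are hypothetical values to tune for your own traffic; new pages with no previous hits are treated as having one.

```python
import re
from collections import Counter
from datetime import datetime

# Captures the date and the request path of Common/Combined Log Format lines.
LOG_RE = re.compile(r'\[(\d{2}/\w{3}/\d{4}):[^\]]*\] "(?:GET|POST|HEAD) (\S+)')


def daily_hits(lines):
    """Count requests per (day, path), ignoring query strings."""
    hits = Counter()
    for line in lines:
        m = LOG_RE.search(line)
        if m:
            # %b expects English month abbreviations (the default C locale).
            day = datetime.strptime(m.group(1), "%d/%b/%Y").date()
            path = m.group(2).split("?", 1)[0]
            hits[(day, path)] += 1
    return hits


def spikes(hits, day, previous_day, factor=10, min_hits=50):
    """Paths with at least `min_hits` on `day` and at least `factor` times
    the previous day's hits (paths unseen before count as 1 hit)."""
    found = []
    for (d, path), n in hits.items():
        if d != day or n < min_hits:
            continue
        before = hits.get((previous_day, path), 0)
        if n >= factor * max(before, 1):
            found.append(path)
    return sorted(found)
```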

Finally, we can block obviously inappropriate content before it is posted by using a list of spammy terms (e.g., streaming or download, adult, gambling and drug-related terms) with built-in features or plugins that can delete such content or mark it as spam. Google also notes that you can set up a Google Alert for [site:your-domain-name spammy-keywords], using commercial or adult keywords you do not expect to see on the site, because “Google Alerts is also an excellent tool for detecting compromised pages”.
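Where no plugin offers this, a term list can be turned into a single case-insensitive pattern that matches whole words or phrases. A minimal sketch; the SPAM_TERMS list is a hypothetical starting point and should be adapted to the site's language and topic, since an over-broad list will also catch legitimate posts.

```python
import re

# Hypothetical starter list; tune it to your own site and language.
SPAM_TERMS = ["free movie", "watch online", "download", "casino", "viagra"]

# One case-insensitive pattern; \b ensures whole words/phrases only.
SPAM_RE = re.compile(
    r"\b(?:" + "|".join(re.escape(t) for t in SPAM_TERMS) + r")\b", re.IGNORECASE
)


def classify(text):
    """Return ('spam', matched terms) or ('ok', [])."""
    found = sorted({m.group(0).lower() for m in SPAM_RE.finditer(text)})
    return ("spam", found) if found else ("ok", [])
```

Because of the word boundaries, "downloads" does not match "download"; adding plural or inflected forms to the list is a deliberate choice rather than an automatic one.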

- Identify and close spam accounts

The web server log can also be used to study user registrations and spot classic spam patterns, such as:

- A large number of registration forms completed in a short time.

- A large number of requests sent from the same IP address range.

- Unexpected User Agents used during registration.

- Meaningless usernames or other meaningless values sent during registration. For example, commercial usernames (names like “Free movie downloads”) that don’t look like real human names and refer to unrelated sites.
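The last pattern, commercial or meaningless usernames, can be approximated with simple heuristics. The keyword list, the vowel-ratio rule and the digit rule below are all hypothetical and will produce false positives, so a score like this is best used to prioritize accounts for manual review rather than to ban them automatically.

```python
import re

# Hypothetical heuristics: expect false positives, use for triage only.
COMMERCIAL = re.compile(r"free|download|movie|casino|pharma|cheap|buy|\.com|https?", re.I)
VOWELS = set("aeiou")


def username_score(name):
    """Higher score = more suspicious username."""
    score = 0
    letters = [c for c in name.lower() if c.isalpha()]
    if COMMERCIAL.search(name):
        score += 2  # looks like an ad, not a person's name
    if letters and sum(c in VOWELS for c in letters) / len(letters) < 0.2:
        score += 1  # e.g. "xkqzvbnt": random keyboard mash
    if sum(c.isdigit() for c in name) >= 4:
        score += 1  # many digits are typical of generated names
    return score
```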

- Prevent Google Search from showing or following unreliable content

If our site allows users to create pages such as profile pages, forum threads or websites, we may discourage spam abuse by preventing Google Search from showing or following new or unreliable content.

For example, we can use a noindex robots meta tag to keep untrusted pages out of the index, or a Disallow rule in robots.txt to temporarily block crawling of pages. We can also mark links in user-generated content, such as comments and forum posts, with rel="ugc" or rel="nofollow", which tells Google about the relationship with the linked page and asks it not to follow that link.
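A robots.txt rule can be tested before deployment with Python's urllib.robotparser. A minimal sketch, assuming untrusted pages live under a hypothetical /pending/ subdirectory. Keep in mind that Disallow stops crawling, not indexing: a blocked URL can still be indexed if linked from elsewhere, and Googlebot cannot see a noindex tag on a page it is not allowed to crawl, so the two techniques should not be combined on the same URL.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical directory for new, not-yet-trusted user pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /user-generated-content-dir-name/pending/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def crawl_allowed(url, agent="Googlebot"):
    """True if the rules above let `agent` crawl `url`."""
    return parser.can_fetch(agent, url)
```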

- Consolidate open-platform content in a dedicated file or directory path

With automated scripts or software, spammers can generate a large number of spam pages on a site in a short time; some of this content may be hosted in scattered file paths or directories, which makes it hard for site owners to detect and clean up spam effectively.

Examples of this are:

example.com/best-online-pharma-buy-red-viagra-online

example.com/free-watch-online-2021-full-movie

It is therefore advisable to consolidate user-generated content in a single file or directory path, to make spam easier to detect and clean up. A recommended file path is:

example.com/user-generated-content-dir-name/example01.html

example.com/user-generated-content-dir-name/example02.html
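The benefit of a single directory is that every later step (log checks, noindex rules, robots.txt, cleanup scripts) can target one path prefix. A small sketch of that idea; user-generated-content-dir-name is the article's placeholder, and the ugc_url and is_ugc helpers are hypothetical.

```python
from urllib.parse import urlsplit

UGC_DIR = "/user-generated-content-dir-name/"  # placeholder name from the article


def ugc_url(host, n):
    """Build the URL for the n-th piece of user content, e.g. example01.html."""
    return f"https://{host}{UGC_DIR}example{n:02d}.html"


def is_ugc(url):
    """True if the URL lives under the single UGC directory."""
    return urlsplit(url).path.startswith(UGC_DIR)
```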

Preventing spam on the site in order to avoid manual actions

Ultimately, the two articles confirm that Google is continuing its battle against spam in search results and that everyone has to do their part.

Site owners, in particular, are called on to monitor the security of their pages, not least because the risks in case of a violation are quite high.

What is currently “advice” to monitor pages and avoid UGC spam may also turn out to be an early warning of Google’s intention to rely more heavily on manual actions as a “deterrent” against spam content, links and user profiles on sites.